Defending Against LST Monopolies

Co-authored by Callum Waters, NashQueue, Jacob Arluck, Barry Plunkett, and Sam Hart

Any dumb ideas are my (Evan’s) fault. Any good ideas in this post are either directly stolen from, or would have been impossible to formulate without, the immense amount of discussion with my colleagues at Celestia Labs, Osmosis, Iqlusion, Skip, and the rest of crypto twitter. This post kneels on the shoulders of PoS giants who don’t even need their last names mentioned, such as Sunny, Dev, Zaki, Bucky, Jae, Sreeram, Vitalik, and countless others. S/o to Rootul Patel for editing!

Liquid Staking Tokens (LSTs) set in place incentives to establish an external monopoly over the validator set. Such a monopoly threatens to exploit the native protocol and its participants for an undue portion of profits while making attacks significantly cheaper.

By combining two existing mechanisms in the same protocol, any third party can fork and instantly convert an existing LST, redirecting any value capture. This ability dramatically lowers the switching costs, encouraging competition and providing recourse to users in the event that an LST is capturing too much value. Essentially, native protocols can defend against LST monopolies by lowering the cost to vampire attack the LST protocol.

The two mechanisms are:

- In-protocol Curated Delegations

- Dynamic Unbonding Period

The first mechanism significantly lowers the barrier for third parties to create their own LST by adding in-protocol tools. The second changes the unbonding period to be dynamic and determined by the net rate of change of the validator set. This allows for the instant conversion between identical LSTs while also increasing the cost of existing attack vectors.

In-protocol Curated Delegations

One mechanism to create LSTs is described here, but any mechanism will work. Feel free to skip to the next section if you already have a mental model for them.

As summarized elegantly by Barry Plunkett:

LST protocols fundamentally do two jobs:

- Validator set curation

- Token issuance

Curated subsets of validators are useful because delegators can easily hedge slashing risk and staking profits, while also selecting for broader, more subjective preferences such as decentralization, quality of setup, or funding specific causes.

Delegations can be made partially fungible by swapping the native token for validator-specific tokens. This is identical to normal delegation, except that the delegation can be transferred to a different account just like the native token. Each validator token is fungible with itself because it represents identical slashing risk.

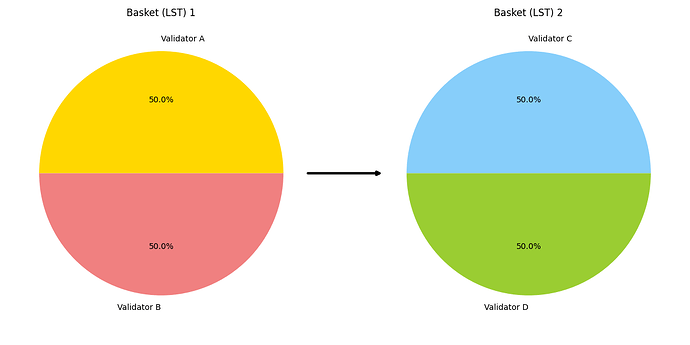

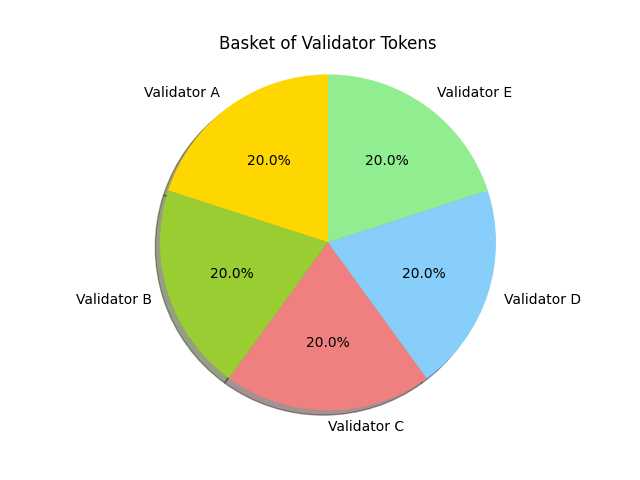

For a user to mint a new LST, the protocol uses a predefined validator subset to mint validator tokens. Then those tokens are wrapped together to form the LST. An LST is just a “basket” of validator tokens.

To create a new LST that had five validators all with equal ratios of voting power, a third party would pass the validators and their respective ratios in a tx. This would result in a simple basket token that looks like this

If a validator gets slashed, then there are fewer native tokens collateralizing their validator tokens, and therefore their validator tokens are worth less. However, the ratio of validator tokens stays the same. For example, assume there is a basket with equal ratios of two validators and one of the validators gets socially slashed entirely. The basket token is still worth the same number of validator tokens. However, the validator tokens of the slashed validator now have no native token behind them, and therefore the basket token is only worth half of what it was before the slash.
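
The two-validator example above can be sketched in Go. This is a minimal illustration, not a proposed implementation: the names `ValidatorToken`, `Basket`, `NativeValue`, and `Slash` are hypothetical, and the integer accounting (rounding down) is an assumption made to keep the sketch short.

```go
package main

// ValidatorToken is a hypothetical record for one validator's token: how many
// validator tokens exist and how many native tokens back them.
type ValidatorToken struct {
	Supply     int64 // validator tokens in circulation
	Collateral int64 // native tokens backing them (reduced by slashing)
}

// Basket is a hypothetical LST: the number of validator tokens held per
// basket token, keyed by validator name.
type Basket map[string]int64

// NativeValue returns the native tokens backing one basket token. It uses
// integer math and rounds down.
func NativeValue(b Basket, vals map[string]ValidatorToken) int64 {
	var total int64
	for name, amount := range b {
		v := vals[name]
		if v.Supply == 0 {
			continue // unknown or empty validator token contributes nothing
		}
		total += amount * v.Collateral / v.Supply
	}
	return total
}

// Slash removes a percentage of a validator's collateral. The validator token
// supply, and therefore every basket's composition, is left unchanged.
func Slash(vals map[string]ValidatorToken, name string, percent int64) {
	v := vals[name]
	v.Collateral -= v.Collateral * percent / 100
	vals[name] = v
}
```

With a basket holding 50 tokens each of two fully collateralized validators, slashing one of them by 100% leaves the basket's composition at 50/50 but halves its native value.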

To understand how third parties can update the composition of the basket and how conversion between different LSTs works, we must first understand a new unbonding mechanism.

Dynamic Unbonding Period

Even if protocols don’t want to add native curated delegation tooling, they need to change their unbonding mechanism to account for the existence of LST protocols.

LSTs make it significantly cheaper to attack the native protocol. To change to a malicious validator set, attackers no longer have to use the native token. Instead, they just have to attack the LST protocol.

This is incredibly problematic, because stakers are incentivized to use an LST, and it’s impossible to stop them from doing so. By this same logic, it’s also impossible to create an LST protocol that makes attacks expensive.

Since users are incentivized to use LSTs and LSTs effectively enable an attacker to avoid slashing that would normally occur in the unbonding period, native protocols need to rethink the mechanisms to make attacks more expensive.

Any new mechanism to make attacks more expensive MUST be enforceable by the protocol. If a mechanism can be bypassed by wrapping a token and if users are incentivized to do so, then they will bypass it (note that not all co-authors agree on this point). One such mechanism that can be enforced is to limit the rate of change of the validator set.

If it is true that LSTs allow attackers to bypass slashing during the unbonding period, and that native protocols can make attacks more expensive by limiting the rate of change of the validator set, then it makes sense to base the unbonding period on the rate of change of the validator set. Instead of there being a flat period of time for each unbond, the time can be dynamic depending on how much of the validator set has changed.

Making Attacks More Expensive by Rate Limiting the Change in the Validator Set

Since networks can’t stop stakers from using LSTs, they need some other mechanism to increase the cost of an attack. They can do this by putting a constraint on the rate of change in a validator set.

The longer it takes to change the validator set, the more expensive it becomes to attack the network. Limiting the rate of change of the validator set does not guarantee that attacks are not profitable. It only makes them less profitable.

For example, assume there is an LST protocol that determines the native protocol’s validator set via token voting with its own separate token. Attackers could borrow the LST protocol’s token, change the validator set of the native protocol, and then return the borrowed LST protocol tokens. Currently, this attack could in theory occur in a single block.

If the rate of change of the validator set is constrained, then, assuming there is a premium charged for borrowing the LST protocol’s tokens, the attacker at least has to pay more. Again, this does not guarantee that attacks are not profitable. It simply makes them less profitable. PoS is arguably still fundamentally flawed.

Unbonding Queue Logic

Limiting the rate of change of the validator set can be achieved by using a queue. Each block, the queue is processed until the rate of change has exceeded the allowed limit. If a given change does not increase the rate of change of the validator set, then the queue is bypassed and that change is applied immediately.

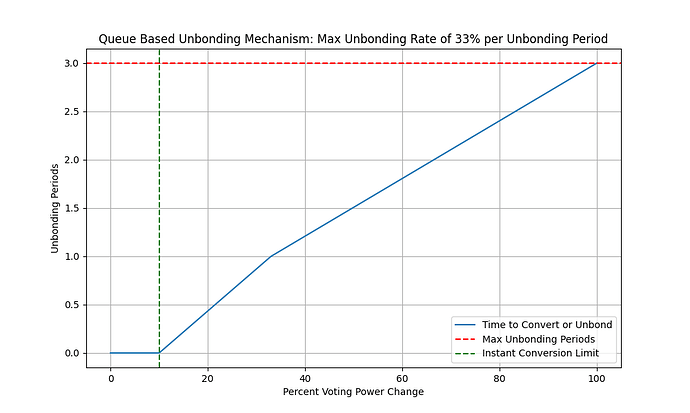

The exact curve that determines the rate of change of the validator set is a bit arbitrary, and not discussed thoroughly in this post. This requires extensive analysis. In this curve, if the validator set has changed by less than 10% over the last unbonding period, all conversions and unbondings are instant. Once that threshold is passed, conversions and unbondings enter the queue.

Therefore, there does not need to be a flat timeout that all stakers wait. If the validator set has not changed significantly over the unbonding period, then the queue will be empty and delegators will be able to safely unbond within a single block. After they unbond, the rate of change is increased. If the validator set has changed too rapidly over the course of the unbonding period, then the delegators will not be able to unbond instantly. Instead they will be appended to the queue, and their unbonding will be processed eventually.

One potential curve is plotted below for demonstration purposes only. It is not proved to be secure.

Here’s some very crude pseudocode that hopefully clarifies the high level queue logic. In this example, each change to the validator set gets “compiled” to a Change struct, for example when a delegator wants to unbond or convert between LSTs.

    // Address, Validator, Rate, MaxRateOfChange, percentChangeInValset, and
    // ApplyChange are intentionally left undefined in this pseudocode. Rate is
    // assumed to be a signed decimal type whose Add returns a new value.

    // Change represents a change in the validator set. It can be compiled from
    // various actions such as redelegating, converting between LSTs, or changing
    // the curation of an LST.
    type Change struct {
        // addr is the address of the issuer of the change, e.g. an individual
        // delegator.
        addr Address
        // VotePowerDiff details the voting power change per validator.
        VotePowerDiff map[Validator]*big.Int
    }

    // ProcessChange depicts the very high level logic applied to each change as
    // soon as it is received. If the change does not increase the rolling diff
    // of the validator set, it is applied immediately. If the change increases
    // the rolling diff, it is added to the queue.
    func ProcessChange(valset []Validator, queue []Change, rateOfChange Rate, c Change) ([]Validator, []Change, Rate) {
        // calculate the signed percent change in the validator set
        delta := percentChangeInValset(valset, c)

        // immediately apply the change if it is negative or equal to zero
        if !delta.IsPositive() {
            valset = ApplyChange(valset, c)
            return valset, queue, rateOfChange.Add(delta)
        }

        // add the change to the queue if it cannot be immediately applied
        return valset, append(queue, c), rateOfChange
    }

    // ProcessQueue is run each block to empty the queue. It applies changes
    // until the max rate of change is reached or the queue is empty.
    func ProcessQueue(valset []Validator, queue []Change, rateOfChange Rate) ([]Validator, []Change, Rate) {
        for len(queue) > 0 {
            // get the first change in the queue
            c := queue[0]

            // calculate the signed percent change in the validator set
            delta := percentChangeInValset(valset, c)

            // compute the resulting rate once, without mutating the current
            // rate; if the max rate of change would be reached, stop
            next := rateOfChange.Add(delta)
            if next.GTE(MaxRateOfChange) {
                break
            }

            // apply the change to the validator set and pop it off the queue
            valset = ApplyChange(valset, c)
            rateOfChange = next
            queue = queue[1:]
        }
        return valset, queue, rateOfChange
    }
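
The pseudocode above leaves open how `rateOfChange` is measured over “the last unbonding period”. One possible reading, sketched below, is a rolling window that sums the signed changes applied within the last `Window` blocks. Everything here is an assumption for illustration: the `RollingRate` type, the choice to sum signed deltas, and the use of `float64` (a real state machine would likely use a fixed-point decimal). The 10% threshold in the usage note is the one from the example curve.

```go
package main

// entry records how much the validator set changed at a given block height.
type entry struct {
	height int64
	delta  float64 // signed change, as a fraction of total voting power
}

// RollingRate is a hypothetical tracker for the net change of the validator
// set over the last Window blocks (one unbonding period).
type RollingRate struct {
	Window  int64
	entries []entry
}

// Record stores a change that was applied at the given height.
func (r *RollingRate) Record(height int64, delta float64) {
	r.entries = append(r.entries, entry{height, delta})
}

// At drops entries that have aged out of the window and returns the sum of
// the remaining signed changes.
func (r *RollingRate) At(height int64) float64 {
	kept := r.entries[:0]
	var sum float64
	for _, e := range r.entries {
		if e.height > height-r.Window {
			kept = append(kept, e)
			sum += e.delta
		}
	}
	r.entries = kept
	return sum
}

// IsInstant reports whether a change of size delta can skip the queue at the
// given height: either it does not increase the rolling change, or the
// rolling change stays at or below the threshold after applying it.
func (r *RollingRate) IsInstant(height int64, delta, threshold float64) bool {
	return delta <= 0 || r.At(height)+delta <= threshold
}
```

For example, with a 100-block window, a 10% threshold, and a 6% change recorded at height 10, a further 3% change at height 20 is instant, a further 6% change is queued, and the same 6% change is instant again at height 111 once the earlier entry has aged out.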

Besides improving the security of the network, the second main benefit of this new unbonding mechanism is the ability to minimize the time it takes to convert one LST to another. This is an identical problem to unbonding, and therefore must be constrained by the same rate and added to the same queue.

Conversion Between Different LSTs

Similar to unbonding, the conversion between two LSTs is regulated using the same queue that is constrained by the percent change in the validator set.

For example, suppose the total voting power is 100 tokens and the max rate of change over the unbonding period is 33%. The maximum number of LST tokens that could be converted to a completely different LST within the first unbonding period would be 33 tokens. For the remaining 67 tokens to be converted, the stakers would have to wait roughly 3 unbonding periods in total.

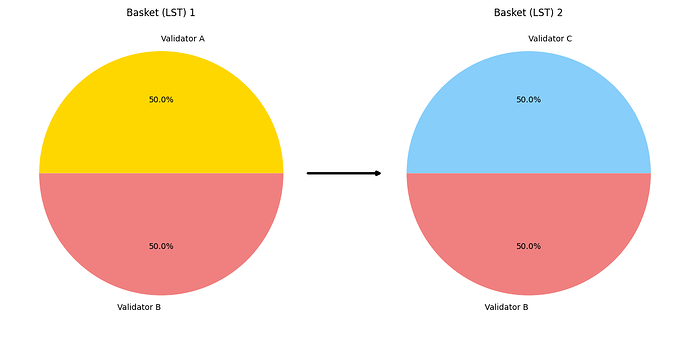

However, things begin to get interesting if LSTs are not completely different.

For example, take a similar scenario where the total voting power is 100 tokens and the max rate of change is 33%. If we wanted to convert all 100 tokens to a different LST, but the LST being converted to only results in a 50% change in voting power, then it would only take half as long, since the validator set is only changing by 50% and not 100%.

Similarly, each conversion does not always result in an increase in the percent change of the validator set. It’s equally possible that a conversion of LSTs reduces the change in the validator set, in which case the conversion is allowed to be performed instantly.
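
To make the idea of a conversion that “reduces the change” concrete, here is a hedged sketch of one way the signed change could be measured. It assumes the protocol keeps a baseline snapshot of voting power from one unbonding period ago and measures distance from it. The `Powers` type and the `Moved`, `Apply`, and `Delta` functions are hypothetical, and the metric (half the sum of absolute per-validator differences) is only one possible choice. It also assumes conversions keep total voting power constant.

```go
package main

// Powers maps a validator name to its voting power in native tokens.
type Powers map[string]int64

func abs(x int64) int64 {
	if x < 0 {
		return -x
	}
	return x
}

// Moved returns how many tokens of voting power differ between two validator
// sets with equal totals: half the sum of absolute per-validator differences.
func Moved(a, b Powers) int64 {
	var sum int64
	seen := make(map[string]bool, len(a))
	for name, p := range a {
		seen[name] = true
		sum += abs(p - b[name])
	}
	for name, p := range b {
		if !seen[name] {
			sum += abs(p)
		}
	}
	return sum / 2
}

// Apply returns a copy of cur with the signed per-validator diffs added.
func Apply(cur, diff Powers) Powers {
	out := make(Powers, len(cur))
	for name, p := range cur {
		out[name] = p
	}
	for name, d := range diff {
		out[name] += d
	}
	return out
}

// Delta returns how a conversion changes the distance from the baseline.
// A result of zero or less means the conversion could be applied instantly;
// a positive result means it would count against the rate limit.
func Delta(base, cur, diff Powers) int64 {
	return Moved(Apply(cur, diff), base) - Moved(cur, base)
}
```

Under this metric, converting 100 tokens from a 50/50 basket of validators A and B to a 50/50 basket of A and C moves 50 tokens of voting power, while converting to a basket of C and D moves all 100, which matches the “half as long” example above. A conversion that moves power back toward the baseline yields a negative `Delta`.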

Updating Curations

Updating the composition of a validator subset is an isomorphic problem to converting between LSTs, and thus must go through the same queue.

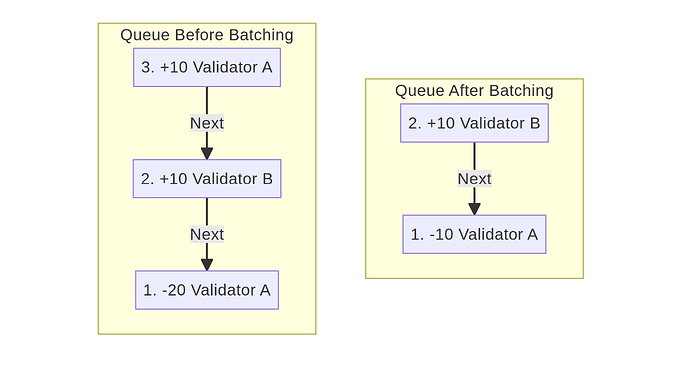

Further Optimizing Queue Processing by Cutting in Line

The processing of the queue can be further optimized by adding the ability to batch opposing conversions. For example, suppose there are two conversions in the queue: one near the back (which has to wait the longest) and one near the front (which will be processed sooner). The one at the back can be batched with the one at the front if combining them reduces the total change in the validator set. This allows those at the back of the queue to “cut in line”, which reduces the size of the queue by merging two conversions and reduces the total change in the validator set.

Batching is a complex problem that we likely don’t want the state machine to perform each block. Since users likely want to unbond or convert as fast as possible, they are incentivized to search for batching opportunities themselves and to pay the gas to batch unbondings/conversions in the queue.
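
As a rough illustration of what a searcher might check before paying to batch two queued conversions, the sketch below nets their per-validator diffs and compares the result with processing them separately. The `Diff` type and the `Merge`, `Size`, and `WorthBatching` functions are hypothetical names, and measuring a conversion by the sum of its positive entries is an assumption.

```go
package main

// Diff maps a validator name to a signed voting power change.
type Diff map[string]int64

// Merge nets two conversions into one by summing their per-validator diffs
// and dropping entries that cancel out.
func Merge(a, b Diff) Diff {
	out := Diff{}
	for name, d := range a {
		out[name] += d
	}
	for name, d := range b {
		out[name] += d
	}
	for name, d := range out {
		if d == 0 {
			delete(out, name)
		}
	}
	return out
}

// Size returns the voting power a diff moves: the sum of its positive entries.
func Size(d Diff) int64 {
	var s int64
	for _, v := range d {
		if v > 0 {
			s += v
		}
	}
	return s
}

// WorthBatching reports whether the merged conversion moves less voting power
// than the two conversions would move if processed separately.
func WorthBatching(a, b Diff) bool {
	return Size(Merge(a, b)) < Size(a)+Size(b)
}
```

Two exactly opposing conversions merge into an empty diff, so both could leave the queue without changing the validator set at all. Conversions that touch unrelated validators gain nothing from being merged.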

Stopping Queue Spam

To stop users/bridges from filling the queue with unbounded amounts of conversions, and thus stopping all users from being able to convert their LSTs or unbond, there must be one more rule. This rule states that users must not be able to fill the queue with more conversions or unbondings than they have delegations. For example, if a user unbonds half of their delegations, but then attempts to convert all of their delegation to a different basket (aka LST), then there must be some arbitrary mechanism to prevent this. One implementation could be to cancel the unbonding/conversion that is earliest.
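
One way the “cancel the earliest” rule could look is sketched below. This is only an illustration under stated assumptions: the `Request` type and `Enqueue` function are hypothetical, a request larger than the issuer's whole delegation is simply rejected, and earlier requests are cancelled whole rather than partially.

```go
package main

// Request is a hypothetical queued unbonding or conversion.
type Request struct {
	Addr   string
	Amount int64 // delegated tokens the request would move
}

// Enqueue appends req and cancels the issuer's earliest queued requests until
// the issuer's queued total fits within its delegation. A request larger than
// the whole delegation is rejected and the queue is returned unchanged.
func Enqueue(queue []Request, req Request, delegated int64) []Request {
	if req.Amount > delegated {
		return queue
	}

	// total amount the issuer already has waiting in the queue
	var pending int64
	for _, r := range queue {
		if r.Addr == req.Addr {
			pending += r.Amount
		}
	}

	// amount by which the issuer would exceed its delegation
	excess := pending + req.Amount - delegated

	out := make([]Request, 0, len(queue)+1)
	for _, r := range queue {
		if r.Addr == req.Addr && excess > 0 {
			excess -= r.Amount // cancel the earliest request first
			continue
		}
		out = append(out, r)
	}
	return append(out, req)
}
```

In the example above, a user with 100 delegated tokens who has already queued an unbonding of 50 and then asks to convert all 100 would have the earlier unbonding cancelled and the conversion queued in its place.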

Incentivizing an Empty Queue

As with existing unbonding mechanisms, users/bridges stop earning fees once they enter the queue. This means they are incentivized to change the composition of their baskets or convert slowly, or only when the queue is already empty.

Defending Against Monopolies

Monopolies are bad, mainly because they are incentivized to, and fully capable of, extracting an undue amount of value. In the case of LSTs, they also make attacking the native protocol cheaper. For example, if there were many LSTs, then an attacker would at least have to attack multiple LST protocols in order to change the validator set enough to attack.

LSTs are incentivized to become monopolies. Quite literally, an LST protocol will be able to capture more value if it becomes a monopoly. A common argument against LSTs is that the network effects of the new token also make it difficult for competing LSTs to gain traction.

In order to defend against monopolies, protocols must make forking and converting an LST profitable.

Using the combined mechanisms above, protocols significantly lower the cost to fork and convert an LST. Third parties can permissionlessly and seamlessly take the profits earned by the LST creator.

This is because the time it takes to convert between two LSTs is now a function of their composition and how much the validator set has changed. The more value that is captured by an LST, the more incentive there is to fork it. It is still possible to form a monopoly, however, only if that monopoly extracts less value than the cost to fork and convert it. This post does not estimate those costs to attempt to quantify the profitability of forking an LST.

Vampire Attacks on Popular LSTs

The following set of incentives for LST creators and delegators emerges:

- Pick a quality decentralized subset of validators, since it’s easy and they don’t want to get slashed (all forms of slashing, particularly social)

- Pick a similar set of validators to others, since that enables the ability to quickly convert between different LSTs

- Control their own LSTs, since that earns revenue

Let’s walk through the simplest example, a vampire attack. Assume there’s a popular LST that’s taking 10% of the revenue that goes to delegators. Anyone, including a bridge or lending protocol, could mirror that LST and either incentivize users or automatically convert them to use its own vampire LST. Since the LSTs are identical, the protocol enables them to be converted instantly, and they are effectively fungible. Since the vampires are now the owners of the LST, they control the composition and get the revenue.

The original popular LST does have recourse! It can meaningfully change the composition of the LST so that it and the vampire no longer have identical compositions. This comes at a significant cost though. Meaningfully changing the validator set likely means entering the queue, which means it won’t be earning fees. Interestingly, this also risks losing users to other LSTs. This is because delegators wanting to convert to the popular LST would be changing the validator set further, and therefore must wait in the queue. However, the opposite occurs if users want to convert away from the popular LST to the vampire or another existing LST. This is because converting away from an LST that just changed the validator set reduces the difference in the validator set, and thus the converters would be able to skip the queue!
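
The mirror-then-diverge dynamic above can be sketched with a few lines of Go. The `LST` struct, its fields, and the `Fork` and `SameComposition` functions are hypothetical and exist only for illustration. The sketch assumes an LST is fully described by its owner, its fee, and its validator weights.

```go
package main

// LST is a hypothetical basket definition: who controls it, what share of
// delegator revenue it takes, and its validator weights in percent.
type LST struct {
	Owner      string
	FeePercent int64
	Weights    map[string]int64
}

// Fork copies the composition of an existing LST under a new owner and fee.
// The weights are deep-copied so later changes to the original do not leak
// into the fork.
func Fork(orig LST, owner string, feePercent int64) LST {
	weights := make(map[string]int64, len(orig.Weights))
	for name, w := range orig.Weights {
		weights[name] = w
	}
	return LST{Owner: owner, FeePercent: feePercent, Weights: weights}
}

// SameComposition reports whether two LSTs hold identical validator weights.
// Converting between such LSTs leaves the validator set unchanged, so under
// the queue rules described earlier it would not need to wait.
func SameComposition(a, b LST) bool {
	if len(a.Weights) != len(b.Weights) {
		return false
	}
	for name, w := range a.Weights {
		if bw, ok := b.Weights[name]; !ok || bw != w {
			return false
		}
	}
	return true
}
```

A vampire created with `Fork` starts with the same composition as the popular LST, so conversions to it are instant. Once the popular LST changes its weights, `SameComposition` returns false and conversions between the two are subject to the queue again.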

Further Incentives

Here are a few other random details/incentives/externalities.

MEV

As pointed out by Barry Plunkett, it is very likely that stakers will purposefully change the validator set to increase the time that it takes for others to convert an LST. For example, if there is an arbitrage between two LSTs that are not identical, MEV searchers can purposefully change the validator set significantly to increase the rate of change for that unbonding period. This means that conversions between non-identical LSTs must enter the queue.

There are many different factors here that need to be thought through in more detail. While filling the queue has an opportunity cost, the arbitrage has potential profits. The time it takes to convert between LSTs is highly dependent upon how the validator set has changed recently. If a given change in the validator set is net negative for the rate of change, then it can occur instantly.

This post does not cover this, but if you are interested in this topic please reach out to any of the authors.

External LSTs

It’s very difficult if not impossible to create an LST that cannot also be represented as a basket of validator tokens, especially considering that the only way to delegate would be by selecting validator tokens. As long as the LST is redeemable by users, then even external LSTs are forkable. If external LSTs limit the rate at which users can redeem their tokens, then users have more of an incentive to use an LST that does not have such a restriction, for example one created using the native tooling.

Give Inflation/Fees to Some Validators Outside of the Active Set

Protocols want validators to be incentivized to join the active set should the need arise. Giving fees to some validators outside the active set, let’s say 50, ensures that a large set can be included in a basket without the basket losing out on funds. It also ensures that some validators are always ready to join the active set.

Forcing the Usage of an LST for a Bridge

While definitely not required, bridges might also want to add the ability to restrict LSTs that are allowed to be transferred across. As mentioned earlier, if ICA is added, then there would be nothing that stops a bridge from converting the LSTs bridged across to ones that it chooses.

Conclusions

Upsides

- Arguably, the unbonding mechanism is significantly more secure than the existing one.

- Third parties get to capture the value that would otherwise be captured by an LST protocol.

- It becomes trivial to delegate to a large curated subset of validators.

- Every bridge is incentivized to control its own LST(s), which means we will have much more decentralized control of the validator set. Besides avoiding the externalities of a monopoly, this might further increase the cost and difficulty of an attack.

- LST creators are incentivized to come to consensus over a quality decentralized set of validators.

Downsides

- While having the ability to seamlessly and permissionlessly fork an existing LST will almost certainly inhibit the monopolization of the validator set to some extent, it’s very unclear by how much.

- Since these mechanisms are setting in place multiple new incentives, there is essentially a guarantee that there will be unpredicted, and likely undesirable, externalities.

- This mechanism decreases the speed at which the validator set can change, and there is an incentive for LST creators to mirror each other. As stated above, mechanisms that don’t limit the rate of change of the validator set are not safe, so we will have to switch to something that does anyway.

- This mechanism might increase the size of the state machine. That being said, some LST logic will exist somewhere, and since that logic is critical to the security of the protocol, having it in a place where social consensus has the ability to hard fork and fix bugs or socially slash might be worth it for the same reasons as keeping delegation logic inside the protocol. It’s also worth noting that the current staking and distribution mechanism is wildly inefficient. It’s possible that the above mechanisms could actually simplify and reduce the state machine. For example, instead of every delegator claiming rewards frequently, rewards can be automatically deposited once into the collateral for each validator token.

- Proof of Stake is still fundamentally flawed, but if we switch unbonding mechanisms we can at least buy some time to properly find a solution.

Future Work

- There could be quite a bit of work done to find an optimal unbonding rate. The incentives almost certainly change with the rate, and how they change is unclear. In fact, while having the ability to fork an existing LST can only hurt monopolies, it’s very unclear to what extent.

- Actually fixing Proof of Stake.

- See MEV section