Brought to you by Electron Labs ![]()

#zkDAS

A simple concept note to discuss how we can zk-prove DAS.

Motivation -

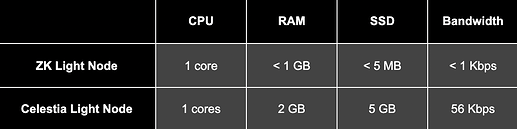

Celestia has very light node, but it can be made Ultra light, by orders of magnitude.

However, this requires zk-proving DAS, which is not well understood. Hence I am writing this article.

How can we ZK-prove data availability?

Background

The goal behind data availability sampling in the Celestia network is to provide guarantee that the data is available with the network.

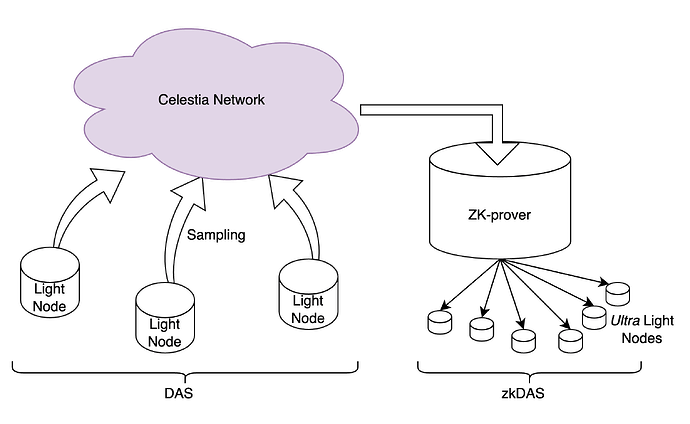

Celestia currently achieves this by having a large number of light clients participate in the network. The assumption is that if many light clients continuously sample the network for bits of data, together these light clients will sample enough data from the network resulting in very high probability that the data is available. Details of how this works is better explained in Celestia docs.

Our Solution

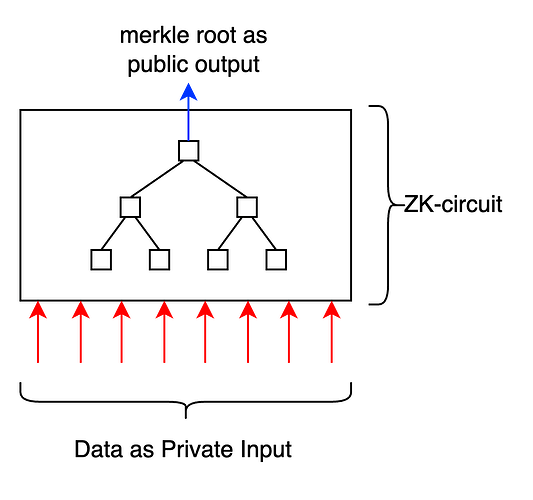

Let us now jump to solution where you can zk-prove DAS. Let us say that a certain entity (referred to as “DA prover” henceforth) in the Celestia network wants to prove that they have the data with them. Another entity (DA Verifier) will verify this claim and we assume that they have limited bandwidth and computational resources (say mobile phone or chrome extension). We assume that the merkle root of the said data is already publicly known.

To prove data availability, the DA prover can simply use a zk-circuit that takes in the required data as private inputs and calculates it’s merkle root. The merkle root is given out as a public output.

This circuit (in plain English) proves that -

The DA prover has all the pieces of data which when merklized results in the given merkle root.

Since the data is given as private input to the circuit, it does not have to be shared with the verifier. The DA verifier only needs the zk-proof and the merkle root to verify this claim. Interestingly, the computational load on the DA verifier does not increase as the data in the network increases. This is because zk-proofs (groth16) can be verified in constant time.

Note: The DA prover will only be able to construct this proof successfully if they have ALL the data with them. It will be impossible to construct this proof even if just 1 bit of the data is missing.

Question: What is the guarantee that the DA prover has all the block data?

Answer: The fact that proof was generated and successfully verified provides a guarantee that the data was available with the DA prover.

However, since Celestia is supposed to handle very large amounts of data, it maybe difficult to construct this zk-proof since groth16 does not scale well with large amounts of data. We can use proof recursion methods to solve this issue, which is discussed in later sections.

Benefits of zkDAS

Using zkDAS, we can construct a ZK based Ultra Light Client for the Celestia network. This will have many advantages -

- This will enable Celestia chain can to run at a lower Blocktime. It is my understanding that Celestia plans to use 11.66 s as the blocktime, and the reason we are not going any lower is to keep the burden on the light clients low.

- Have a large number of light clients in Celestia network is super important for the security of the network. Our approach will lighten the computational requirements from the Celestia light clients by orders of magnitude, enabling a much larger number of Celestia light nodes to exist, thereby improving security.

- This will make it possible to run the Celestia light client as an Ethereum smart contract. Given that Ethereum itself will be able to verify DA, this will massively increase the security for DA for Ethereum rollups, and may even unlock new possibilities.

- This model will not require any changes to Celestia core infrastructure. One can simply start running zk-provers for DAS.

- In fact, the current light clients for Celestia can continue to operator as is, without being aware of the zk-provers, and continue to provide security to the network.

Incentivising creation and operation of zkDAS nodes.

We believe that zkDAS can become part of the Celestia node infrastructure itself. Similar to Celestia validators, DA prover operators can earn rewards and tokens for generating valid zk-proofs. Having said that, I think other ideas can and should be explored.

How will the ZK-prover technology work?

Using proof recursion to make the ZK-prover feasible

Celestia needs to deal with large volumes of data. This will require a large merkle tree being constructed inside the zk-circuit. It is infeasible to perform this operation inside a single groth16 circuit due to -

- size of data that needs to be loaded to the circuit and

- For a 6.4GB of data, the hashing operations for calculating the merkle root will require 3.2 trillion constraints (reference - Benchmarking ZKP Development Frameworks: the Pantheon of ZKP - Ethereum Research), which is not feasible at all in groth16.

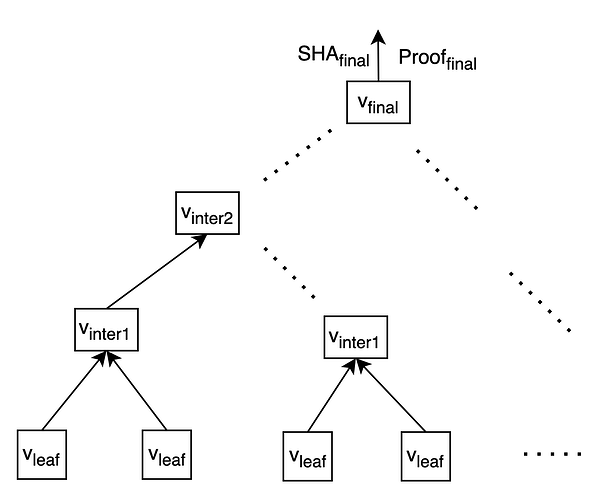

We believe this can be easily achieved using recursive proving schemes out there. We won’t compare different recursive systems with each other here, instead let us look at a circuit tree construction that can help us achieve this.

In the following section, we assume that the data is arranged as a large array and we shall discuss how we can calculate and prove the merkle root of the same. Let’s get started.

We start with a large array containing N items {item_{0}, item_{1} … item_{n-1}}. We assume that the verifier only has the merkle root (root\_hash) of this array. We want to create a zk-proof that the merkle root of the array is root\_hash.

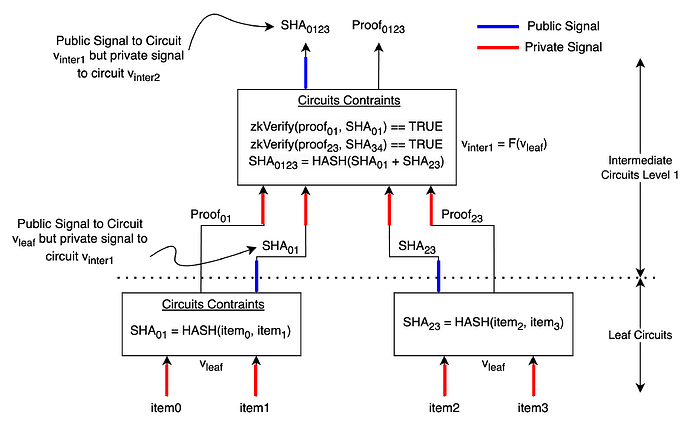

Let us start understanding this by starting with the leaf circuits.

Leaf Circuit -

Private Signals: item_0 , \space item_1

Public Signals: SHA_{01}

Circuits Constraints:

- HASH(item_0 , item_1) == SHA_{01}

Resultant Proof: SHA_{01}, Proof_{01}

This circuit proves that the prover is in possession of two inputs which when hashed together results in the value SHA_{01}.

Note: We must keep SHA_{01} as a public signal to ensure that Proof_{01} cannot be validated without SHA_{01}, thus establishing a link between the two. This is useful for upper intermediate circuits.

We can now take two such circuits and recursively combine them together into a single circuit as show in the intermediate circuits level 1 in the diagram above.

Intermediate Circuit Level 1 -

This is the circuit just above leaf circuits.

Private Signals: SHA_{01},\space Proof_{01}, \space SHA_{23},\space Proof_{23}

Public Signals: SHA_{0123}

Circuits Constraints:

- zkVerify(proof_{01}, SHA_{01}) == TRUE

- zkVerify(proof_{23}, SHA_{23}) == TRUE

- HASH(SHA_{01} , SHA_{23}) == SHA_{0123}

Resultant Proof: SHA_{0123}, Proof_{0123}

This circuit proves that the prover has have all the elements of an array whose merkle root is SHA_{0123}

We can easily scale out this concept to a larger number of leaf nodes as shown below -

Finally, the verifier can check if SHA_{final} == root\_hash and that the Proof_{final} is valid.

The final proof Proof_{final} proves that the DA prover has have all the elements of an array whose merkle root is SHA_{final}

Next Steps

- We invite the Celestia community to provide their thoughts about this. This is a very early concept note, and lot’s of community feedback will help us make it better, and find potential issues in the model. We would be happy to host a discussion within the Celestia community to dig deeper into this model.

- If the Celestia community is excited about this, then we should build this!